AI security – what could possibly go wrong?

Can AI be hacked? Of course it can! AI security is a real problem, and we need to do something about it.

AI is everywhere in the news nowadays, saving and dooming the world in equal measure. But what about AI security? There’s a lot of discourse around the potential of LLMs like ChatGPT in particular, while AI has already managed to match or beat humans at video games, scientific image analysis, and, to some extent, driving and maybe even coding. But, as with any fast-moving technology with a lot of money involved, hackers will always ask the question even if we don’t: “what could possibly go wrong?”

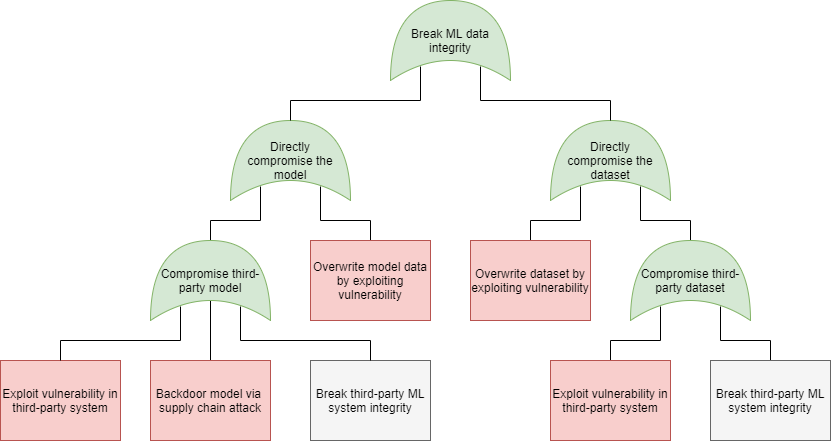

An absolutely critical element of these modern AI systems is machine learning: training models on real data so they can generalize from it and make the right decisions even when presented with previously unseen data. In these systems, training data tends to start at “whatever we find on the Internet”. So, is the security of machine learning keeping up with the breakneck pace of AI development as a whole? Unfortunately, the answer is “not really”. With the right tools and knowledge, an attacker can exploit the differences between AI and human perception and understanding to trick an AI into perceiving a 3D-printed turtle toy as a rifle, corrupt an AI model so that it prescribes lethal blood thinner doses to patients, or hide inaudible commands in audio to trigger backdoors in speech recognition systems, and that’s just the tip of the iceberg. These attacks (‘evasion’ and ‘poisoning’, that is, manipulating the inputs a trained model sees or manipulating the data it is trained on) were explored as early as the mid-2000s and have been demonstrated against modern AI systems for over 10 years now. Mitigations exist, but they are not strong enough to stop motivated attackers, and the currently hyped LLMs are even more vulnerable to some of these attacks.

Similarly, there are attacks that allow an attacker to steal a (valuable) machine learning model from a system, extract data from its training set (hopefully the training data didn’t include any personal data or credit card numbers!), or even reconstruct the images or text used to train the model in the first place.
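To make ‘evasion’ a little more concrete: one of the simplest evasion techniques is the fast gradient sign method (FGSM), which nudges every input feature by a small amount ε in whichever direction increases the model’s loss. The sketch below applies it to a toy linear classifier in plain Python. The weights, bias, input and ε are made-up illustrative values, not taken from any real system, and FGSM is not the specific technique behind the turtle demo; real attacks target deep networks and compute gradients with an ML framework, but the principle is the same.

```python
# Minimal FGSM sketch against a toy linear classifier (illustrative values only).

def sign(v):
    """Return +1, -1 or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)

def score(w, b, x):
    """Linear model score w·x + b; a positive score means class +1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else -1

def fgsm_linear(w, x, y, eps):
    """FGSM step for a linear model with logistic loss log(1 + exp(-y * score)).

    The loss gradient w.r.t. x is a positive multiple of -y * w, so its sign
    is -y * sign(w). Each feature moves by eps in that direction and is then
    clipped back into the valid [0, 1] range (think: pixel intensities).
    """
    return [min(1.0, max(0.0, xi - eps * y * sign(wi))) for wi, xi in zip(w, x)]

# Hypothetical model and input.
W = [0.5, -0.3, 0.8, 0.1]
B = -0.2
x_clean = [0.6, 0.2, 0.4, 0.5]

x_adv = fgsm_linear(W, x_clean, y=predict(W, B, x_clean), eps=0.3)

print(predict(W, B, x_clean))  # 1
print(predict(W, B, x_adv))    # -1
print(max(abs(a - c) for a, c in zip(x_adv, x_clean)))  # roughly 0.3
```

No feature changes by more than ε = 0.3, yet the score drops by ε·Σ|wᵢ| = 0.3 · 1.7 = 0.51, from 0.41 to about -0.1, which is enough to flip the prediction. Deep networks are far from linear, but they behave linearly enough locally that the same trick works surprisingly well.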

But there’s more to AI security than these sophisticated attacks. Ultimately, an AI is just a program: its code can have bugs and security flaws just like any other software, and there are also many novel threats (such as prompt hacking) that can break the safeguards a developer puts in place. And there’s a lot of third-party code involved. A typical AI system relies on thousands of dependencies and hundreds of thousands of lines of code, all potentially filled with regular ‘boring’ vulnerabilities like integer and buffer overflows (just look at the triple-digit number of vulnerabilities discovered in TensorFlow every year). More importantly, the supply chain these systems rely on is also open to attack, and it is surprisingly easy to infect with backdoors that’ll do the attacker’s bidding. Just how much do you trust that third-party model or model zoo?
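As one concrete example of how easy supply-chain backdoors can be: many model files in the Python ecosystem are serialized with the standard `pickle` module (PyTorch’s `torch.save`, for instance, uses it under the hood), and unpickling can call arbitrary functions chosen by whoever created the file. The sketch below uses a harmless payload that merely evaluates `os.getcwd()`. The `BackdooredModel` class is a hypothetical stand-in for a booby-trapped checkpoint; a real attacker could run any code at this point.

```python
import os
import pickle

class BackdooredModel:
    """Hypothetical stand-in for a 'model' an attacker uploads to a model zoo."""

    def __reduce__(self):
        # pickle records (callable, args) and invokes the callable on LOAD.
        # This payload is harmless, but it could just as well be any code.
        return (eval, ("__import__('os').getcwd()", {}))

# Attacker side: serialize the "model" and publish the file.
malicious_bytes = pickle.dumps(BackdooredModel())

# Victim side: "just load the pretrained model".
loaded = pickle.loads(malicious_bytes)

# What comes back is not a model at all, but whatever the payload returned.
print(type(loaded).__name__)   # str
print(loaded == os.getcwd())   # True
```

The usual advice is never to unpickle files from untrusted sources; tensor-only formats such as safetensors, or restricted loading such as `torch.load(..., weights_only=True)` in recent PyTorch versions, reduce this particular risk.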

Finally, we have to talk about the security of code written by AI, since the AI systems that write it can be manipulated and corrupted just like any other application. For example, an attacker can exploit ‘package hallucination’: observe which nonexistent package names ChatGPT makes up when writing code, then upload a malicious package under one of those names to a public package repository, a hybrid of typosquatting and dependency confusion attacks. And of course, code written by these systems builds on existing (typically open source) code written by humans, which can be very prone to vulnerabilities. That means the generated code can contain the same vulnerabilities, or worse yet, backdoors! Garbage in, garbage out.

I talked about this topic back in 2020, too, and things have changed quite a bit since then, sometimes for the better and sometimes for the worse. Let’s see how many ways someone can break your favorite AI at WeAreDevelopers World Congress 2023!