The ECU killer: broken authentication in automotive security

The most severe vulnerability in automotive security is broken authentication. Let’s take a closer look at the reasons.

Automotive security is a relatively new area, even though some attacks against ECUs go back to the early 2000s. The first paper demonstrating a practical attack against a vehicle was 2010’s Experimental Security Analysis of a Modern Automobile, but it was Miller and Valasek’s live 2015 Jeep takeover hack (which resulted in Chrysler recalling 1.4 million Jeeps to address the vulnerability) that really opened the floodgates and made the public recognize car hacking as a real problem.

Since 2015, security folks have been paying a lot more attention to automotive security, and almost every year has brought serious vulnerabilities to light. Some examples are the Tesla Model S wireless interface (2017), the Volkswagen Golf GTE and Audi A3 Sportback infotainment system (2018), and the Toyota Display Control Unit (2020). Two hackers actually won a Tesla Model 3 after successfully attacking the car’s web browser at the 2019 Pwn2Own hacking competition.

Of course, automotive security is evolving quickly and many of these attacks were on older models (typically mid- to late 2010s). However, considering that the average age of a car on the road is over 10 years, “old” vulnerabilities and “outdated” design will stay relevant for quite a while.

The Car Hacking Village is an automotive security community that focuses on this topic. One of the talks they hosted at DEF CON 28 in 2020 was Realistic Trends in Vulnerability based on Hacking into Vehicle by NDIAS Ltd. This presentation summarized the results of numerous security testing projects, with over 40 evaluated ECUs and 300 identified vulnerabilities. These numbers are big enough to identify some trends in automotive security. Unsurprisingly, the largest share of the identified vulnerabilities (37%) was in infotainment software. Infotainment systems also had the highest proportion of high- and medium-risk vulnerabilities. Let’s take a look at what types of vulnerabilities were the most common, and the most damaging.

The State of the Pwn in automotive security

The security evaluation methodology described by NDIAS highlights the importance of in-depth code analysis as opposed to black-box interface testing, both in the number of findings (the former being twice as effective as the latter) and in their severity (with code analysis typically finding more serious problems). This underlines the difference in efficacy between in-depth security evaluation and penetration testing, as well as the usefulness of code review.

When looking at high-risk vulnerabilities, NDIAS found a 70/30 split between the environment and the application. And when looking at only medium- and high-risk vulnerabilities, the most populous vulnerability category was insecure authentication. This category encompasses several typical issues: use of insecure authentication protocols, hardcoded secret keys, and use of weak randomness.

Thus, a common problem is failing to authenticate requests properly. At first glance, this may not look like a huge issue – after all, the ECUs are not supposed to be accessible from the outside. So how can an attacker exploit these vulnerabilities?

Perhaps surprisingly, NDIAS found that even though 58% of local vulnerabilities could be exploited over the CAN bus, CAN was not the most important attack surface. In fact, the UDS protocol was much more valuable: 83% of the discovered medium- and high-risk vulnerabilities could be exploited through it. Let’s take a closer look.

UDS and its inherent (in)security

UDS (Unified Diagnostic Services, defined in ISO 14229) is a protocol stack used alongside CAN. While the latter provides physical and data link layer services, UDS is implemented in the session and application layers. Since it is intended to diagnose and fix problems with ECUs, there is typically no network segmentation for UDS messages. It is also important to note that UDS can be used over a wireless connection instead of CAN, thus potentially opening up some of these vulnerabilities to external attackers.

Of course, diagnostics functionality like this should not be accessible by just anyone. Thus, UDS defines a service (Security Access, service ID 0x27) that can be used to facilitate an authentication process for protected actions:

- The client sends a request to access a protected function that requires a certain security level.

- The server responds with a “seed” value appropriate for the security level.

- The client generates a “key” value using the “seed” and sends it back to the server.

- The server also generates a “key” value from the “seed”, and checks whether it matches the one sent by the client. If it does, the authentication is successful, and the client will gain the security level they asked for.
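The four steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a real UDS stack: the `EcuServer` class, its method names, and `demo_key_fn` are hypothetical, and the key derivation is deliberately a pluggable function because the standard does not prescribe one. The byte values follow the usual UDS conventions (request 0x27, positive response 0x67, negative response 0x7F with code 0x35 for an invalid key).

```python
import hashlib
import hmac
import secrets
from typing import Callable, Optional

SID_SECURITY_ACCESS = 0x27
SID_POSITIVE = 0x67      # request SID 0x27 + 0x40
SID_NEGATIVE = 0x7F
NRC_INVALID_KEY = 0x35


class EcuServer:
    """Hypothetical ECU-side handler for the seed/key exchange."""

    def __init__(self, key_fn: Callable[[bytes], bytes], seed_len: int = 16):
        self._key_fn = key_fn
        self._seed_len = seed_len
        self._pending_seed: Optional[bytes] = None
        self.unlocked = False

    def request_seed(self, sub_function: int) -> bytes:
        """Steps 1-2: the client asks for access, the server returns a fresh seed."""
        self._pending_seed = secrets.token_bytes(self._seed_len)
        return bytes([SID_POSITIVE, sub_function]) + self._pending_seed

    def send_key(self, sub_function: int, key: bytes) -> bytes:
        """Steps 3-4: the server recomputes the key and compares it."""
        seed, self._pending_seed = self._pending_seed, None  # each seed is single-use
        if seed is not None and hmac.compare_digest(key, self._key_fn(seed)):
            self.unlocked = True
            return bytes([SID_POSITIVE, sub_function])
        return bytes([SID_NEGATIVE, SID_SECURITY_ACCESS, NRC_INVALID_KEY])


def demo_key_fn(seed: bytes) -> bytes:
    """Stand-in derivation for this demo only (assumed shared secret)."""
    return hmac.new(b"demo-only-secret", seed, hashlib.sha256).digest()


ecu = EcuServer(demo_key_fn)
response = ecu.request_seed(0x01)        # odd sub-function: request seed
seed = response[2:]                      # strip the two header bytes
print(ecu.send_key(0x02, demo_key_fn(seed)).hex(" "))  # 67 02
```

Note that the server forgets the seed after one attempt, so a captured key cannot be reused against a later challenge.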

There’s nothing wrong with the specification itself – it’s standard challenge-response authentication that can be secure if the parameters are chosen correctly (see e.g. SCRAM defined in RFC 5802). However, the standard does not define the implementation details. It’s up to the developer to figure out the length of the “seed”, how the “seed” is generated, and how the “key” can be derived from it. This is a typical security engineering scenario, and not only in automotive security: the question is not whether it can work well (it does!), but rather if it’s used correctly. It can be tempting to go for a more simplistic implementation (just use a constant seed or a weak pseudorandom number generator!), or even to come up with some proprietary ‘secret’ algorithm for calculating the key, and hope the attacker doesn’t learn it (which goes against the basic principles of cryptographic design by relying on security by obscurity). Note that while the AUTOSAR standards define several possible controls to make this authentication method more secure – such as logging failed attempts and enforcing a delay between them – they do not seem to have any specific requirements towards the seed or the key.

The NDIAS report identified multiple common weaknesses caused by incorrect Security Access implementation. Some ECUs had the secret value hardcoded, so an attacker could trivially replicate the key generation by reverse engineering the firmware. Others used a static seed and/or a weak or predictable pseudorandom number generator (PRNG), either of which made the process vulnerable to replay attacks. And there were even some ECUs that didn’t use Security Access at all, allowing anyone to read sensitive data as long as they could send a UDS message to the ECU.
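To see why a static seed invites replay, consider the following toy model. Everything in it is an assumption made for illustration: `StaticSeedEcu`, the seed value, and `secret_key_fn` are invented and are not taken from the NDIAS material. The point is only that the attacker never needs to know how the key is computed.

```python
from typing import Callable


class StaticSeedEcu:
    """Toy ECU that always hands out the same seed (the flaw being shown)."""

    STATIC_SEED = bytes.fromhex("1234")  # hypothetical constant seed

    def __init__(self, key_fn: Callable[[bytes], bytes]):
        self._key_fn = key_fn

    def request_seed(self) -> bytes:
        return self.STATIC_SEED

    def send_key(self, key: bytes) -> bool:
        return key == self._key_fn(self.STATIC_SEED)


def secret_key_fn(seed: bytes) -> bytes:
    """Proprietary algorithm known only to the ECU and the legitimate tester."""
    return bytes(b ^ 0x5A for b in seed)


ecu = StaticSeedEcu(secret_key_fn)

# A legitimate tester authenticates while the attacker listens on the bus.
seed = ecu.request_seed()
sniffed_key = secret_key_fn(seed)
assert ecu.send_key(sniffed_key)

# Later, the attacker gets the same seed and simply replays the sniffed key.
replayed_seed = ecu.request_seed()
attacker_unlocked = replayed_seed == seed and ecu.send_key(sniffed_key)
print(attacker_unlocked)  # True
```

A predictable PRNG fails in the same way, only more slowly: the attacker waits for, or forces, a seed that has been seen before.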

Let’s see a real-world example of an ECU using weak authentication!

When even the automotive security standard gets it wrong: airbag detonation made easy

In Security Evaluation of an Airbag-ECU by Reusing Threat Modeling Artefacts (Dürrwang et al., 2017), a group of researchers from the University of Applied Sciences Karlsruhe identified a weakness in ISO 26021-3 (Road vehicles – End-of-life activation of on-board pyrotechnic devices – Part 3: Tool Requirements). This is the ISO standard that specifies how the Security Access service should be implemented for the airbag control unit when the airbag has reached its end of life and needs to be detonated.

The standard defines the implementation through an example using a 2-byte seed – which is rather short and vulnerable to brute forcing. Worse yet, the first byte is fixed as the version number of the standard, meaning that the seed is reduced to a single meaningful byte, i.e. 256 possible values! So, if a developer implemented the ECU according to the specification, the authentication would turn out to be very weak. But to make things even worse, the example generates the key by simply taking the bitwise inverse of the seed. An excerpt of the paper shows a successful authentication process observed by the researchers:

7F1 [SF] ln: 2 data: 27 5F

7F9 [SF] ln: 4 data: 67 5F 01 E3

7F1 [SF] ln: 4 data: 27 60 FE 1C

7F9 [SF] ln: 2 data: 67 60

The second message contains the seed – we can see that the first byte is indeed fixed at the version number of the protocol (01), and so we have only eight bits of entropy. The third message contains the key, which in this case is a simple inverse of the seed. Based on this knowledge, an attacker can trivially authenticate to this ECU – no secrets are needed!
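The relationship between the seed and the key in this trace is easy to check. The short sketch below (the function name `inverted_key` is ours) flips every bit of the observed seed and reproduces the key that the tester sent.

```python
def inverted_key(seed: bytes) -> bytes:
    """Key derivation from the example: the bitwise inverse of the seed."""
    return bytes(b ^ 0xFF for b in seed)


seed = bytes.fromhex("01E3")   # from the second message: 67 5F 01 E3
key = inverted_key(seed)
print(key.hex(" ").upper())    # FE 1C, matching the third message: 27 60 FE 1C
```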

But even if we assume that the developers didn’t follow the example to the letter (and used, say, an XOR with a secret 16-bit value instead of simply inverting the bits), the seed and key were so short that an attacker could trivially brute force the key. ISO 26021 did not mandate any delays or throttling, so going through all 65536 possibilities would not take too long.
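A back-of-the-envelope estimate shows how small this search space is. The time per attempt below is purely an assumed figure for illustration (10 ms per seed request plus key attempt); the real value depends on the bus and the ECU.

```python
def brute_force_seconds(key_bits: int, seconds_per_attempt: float) -> float:
    """Worst-case time to try every possible key of the given length."""
    return (2 ** key_bits) * seconds_per_attempt


ATTEMPT_SECONDS = 0.01  # assumption: 10 ms per attempt, with no throttling

print(2 ** 16)                                             # 65536
worst_case = brute_force_seconds(16, ATTEMPT_SECONDS)
print(f"{worst_case / 60:.1f} minutes")                    # 10.9 minutes
```

On average, the attacker would succeed in about half that time.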

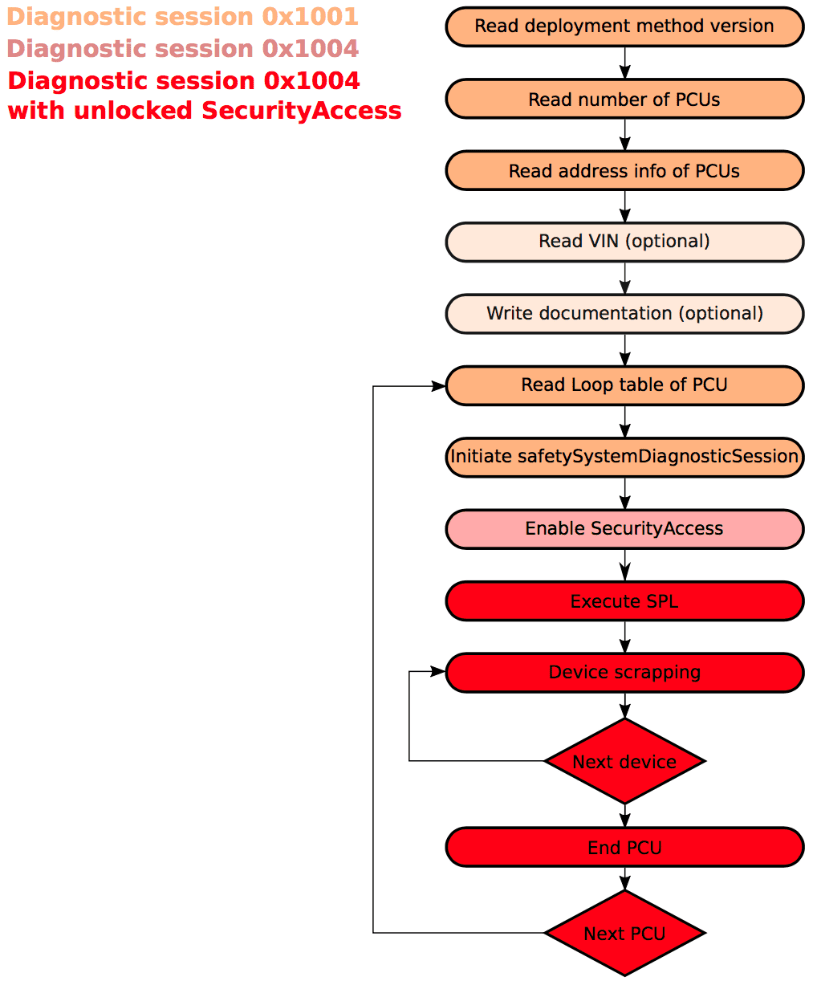

The image below shows the workflow used by the researchers to detonate the airbags in cars manufactured after 2014 (PCU stands for Pyrotechnic Control Unit, i.e. the airbag ECU). The only necessary condition is that the ignition for the vehicle needs to be turned on and the vehicle must be moving slower than 6 km/h (around 4 mph).

This weakness in the standard was recognized as CVE-2017-14937, instructing manufacturers to implement more secure authentication than the example exhibited in the standard. What can we learn from this?

Rule #1 of crypto: “Don’t do this at home!”

As always with cryptography, trying to create cryptographic algorithms from scratch is a very bad idea, and doubly so if we’re relying on security by obscurity. It’s much better to use well-established standards and best practices instead. In the area of authentication, this means using TPMs (trusted platform modules) and HSMs (hardware security modules) to manage secrets such as pre-shared keys. But even more importantly, when implementing an open-ended challenge-response algorithm such as the one used for Security Access in UDS, it’s preferable to use an existing solution that protects message integrity, confidentiality, and authenticity. For example, an HMAC with a pre-shared key is a well-understood construction that fits automotive security scenarios such as this one.
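As a rough sketch of what an HMAC-based seed and key scheme could look like, consider the following. The function names, the 128-bit lengths, and the choice to bind the security level into the MAC are our assumptions, not something mandated by UDS. In a real ECU the pre-shared key would live inside an HSM rather than in a variable.

```python
import hashlib
import hmac
import secrets

SEED_LEN = 16  # 128-bit seed
KEY_LEN = 16   # 128-bit key (HMAC-SHA-256 output truncated to half length)


def new_seed() -> bytes:
    """Generate an unpredictable seed from the OS CSPRNG."""
    return secrets.token_bytes(SEED_LEN)


def derive_key(psk: bytes, seed: bytes, level: int) -> bytes:
    """Derive the response key; the level is included so keys differ per level."""
    message = bytes([level]) + seed
    return hmac.new(psk, message, hashlib.sha256).digest()[:KEY_LEN]


def verify_key(psk: bytes, seed: bytes, level: int, candidate: bytes) -> bool:
    """Constant-time comparison avoids leaking how many bytes matched."""
    return hmac.compare_digest(derive_key(psk, seed, level), candidate)


psk = secrets.token_bytes(32)          # demo only; provisioned per ECU in practice
seed = new_seed()
key = derive_key(psk, seed, level=0x01)
print(verify_key(psk, seed, 0x01, key))   # True
print(verify_key(psk, seed, 0x03, key))   # False: key is bound to level 0x01
```

Without the pre-shared key, observing any number of seed and key pairs does not help an attacker predict the key for a fresh seed.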

Another consideration is what key length to use when implementing Security Access for UDS. Even if the seeds are generated with a strong PRNG, the algorithm used to derive a key from a seed is sound, and the system enforces delays on failed authentication attempts, 16-bit values can feasibly be brute forced over time. Consider that there are only 65536 possible key values for a given seed. A patient attacker may decide to build a full look-up table of the key for each possible seed value, either by observing how other ECUs authenticate to the target ECU (keep in mind that CAN has no protection against eavesdroppers!) or by spoofing (impersonating) the target ECU to another one and systematically giving it all possible seeds to obtain the appropriate response key for each. By increasing the seed and key length to 128 bits, we can make this type of attack infeasible. And of course, appropriate rate limiting must be enforced, ultimately resulting in Exceeded Number of Attempts (0x36) and Required Time Delay Not Expired (0x37) responses to an attempted brute force attack.
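The rate limiting described here can be sketched as a small state machine. The `AttemptLimiter` class and its default limits (three attempts, ten-second delay) are hypothetical values chosen for illustration. The response codes are the ones named above, plus 0x35 for an invalid key. The clock is passed in explicitly so the behavior is easy to follow.

```python
from typing import Optional

NRC_INVALID_KEY = 0x35
NRC_EXCEEDED_ATTEMPTS = 0x36
NRC_DELAY_NOT_EXPIRED = 0x37


class AttemptLimiter:
    """Hypothetical lockout logic for failed Security Access attempts."""

    def __init__(self, max_attempts: int = 3, delay_seconds: float = 10.0):
        self.max_attempts = max_attempts
        self.delay_seconds = delay_seconds
        self._failures = 0
        self._locked_until = 0.0

    def check_request(self, now: float) -> Optional[int]:
        """Return a negative response code if requests are currently blocked."""
        if now < self._locked_until:
            return NRC_DELAY_NOT_EXPIRED
        return None

    def record_result(self, key_ok: bool, now: float) -> Optional[int]:
        """Update the counters after a key check; return a code on failure."""
        if key_ok:
            self._failures = 0
            return None
        self._failures += 1
        if self._failures >= self.max_attempts:
            self._failures = 0
            self._locked_until = now + self.delay_seconds
            return NRC_EXCEEDED_ATTEMPTS
        return NRC_INVALID_KEY


limiter = AttemptLimiter()
codes = [limiter.record_result(False, now=float(t)) for t in range(3)]
print([hex(c) for c in codes])               # ['0x35', '0x35', '0x36']
print(hex(limiter.check_request(now=5.0)))   # 0x37 (still inside the delay)
print(limiter.check_request(now=12.0))       # None (delay has expired)
```

With these assumed limits an attacker gets at most three guesses per ten seconds, which stretches a 16-bit search to days and leaves a 128-bit key space far out of reach.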

Of course, it can be argued that once an attacker has gained access to the CAN bus, it’s all over, but remember that NDIAS identified wireless access options for the UDS functionality of many of these ECUs. And even assuming that these vulnerabilities could only be exploited by a local attacker, it’s important to consider the principle of defense in depth: having proper authentication in place would either prevent a successful attack entirely or make the attacker’s job considerably harder. This is reflected in the modern Zero Trust Architecture concept (basically: authenticate and authorize every request, even if it’s sent from the local network) that has been quickly gaining traction among automotive security experts. NIST has also formalized the approach in Special Publication 800-207 (2020).

It is also important to note that, while we focused on authentication problems, NDIAS also found many other types of vulnerabilities. The ones highlighted in their presentation were disabled TLS certificate validation, TOCTTOU (time-of-check to time-of-use) races used to bypass signature validation, outdated libraries, buffer overflows, directory traversal, improper error handling, and insecure sensitive data management.

The good news is that in our courses, you can understand in detail how these vulnerabilities can affect your code, and what you can do about them. We teach software developers how NOT to code. Focusing specifically on automotive security, Secure coding in C and C++ for automotive adds essential awareness that all developers should have about the environment in which they develop code. With all discussed secure coding issues we directly link to the relevant parts of guidelines and standards (such as MISRA C or SEI CERT C and C++ coding standards). Alongside gaining specific software security skills, on the course you will also see a number of real-world case studies from the automotive sector, similar to the topic of this article.