Hardware security – the lowest level of security

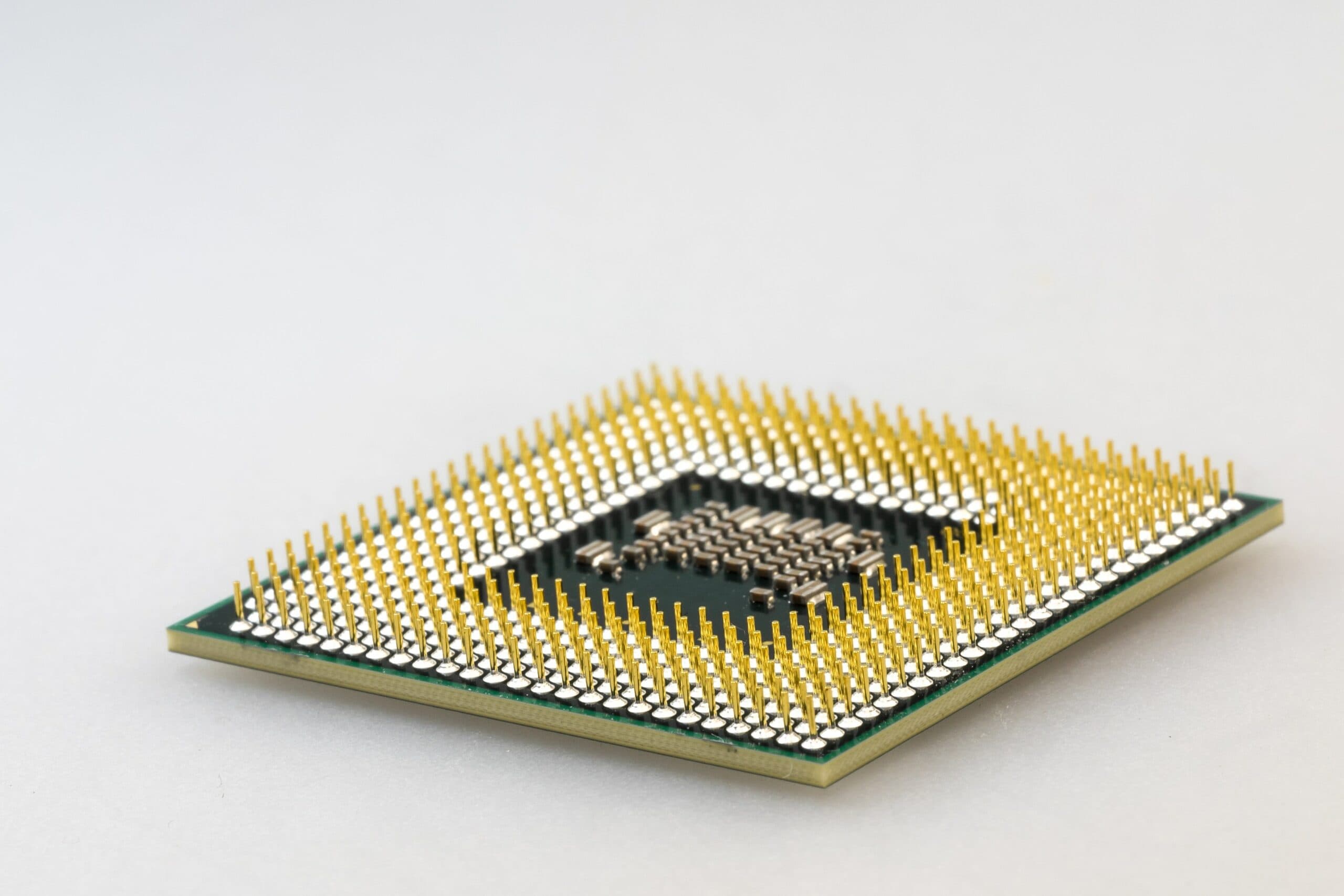

Security is not just about software. The hardware components of modern devices, such as the CPU or memory, can also be vulnerable.

Hardware security is an emerging issue, as there are more and more devices around us. More and more devices that connect to the rest of the world. More and more devices which are not complex enough to warrant a full-blown computer. Just think about home automation, intelligent light switches, heating, door locks, security cameras. Even ordinary toys are getting intelligent. Yet these devices often lack essential security, even though they may hold resources that attract attackers. So, just like any other computer system, they must be designed and implemented with security in mind.

Do you know what your CPU is doing?

Long gone are the times when a transistor radio contained only a couple of stand-alone transistors, resistors, capacitors, and coils. Modern hardware consists of millions or even billions of integrated elements.

While the first circuits – like the transistor radios sold in the 1950s – contained fewer than 10 transistors, the Intel 4004 processor released in 1971 contained 2250 transistors, the 8086 released in 1978 contained around 29 thousand, and the infamous Pentium processor released in 1993 had over 3 million. But, as always, with great power comes great responsibility. More complexity, be it in hardware or software, brings more opportunities to make a mistake. And this brings hardware security to the table. To quote the well-known Murphy’s law: “Anything that can go wrong will go wrong”.

The first hardware problem that made it to the headlines was the FDIV bug in 1994, present in early versions of Intel’s 60–100 MHz P5 Pentium processors. The bug was caused by a few missing entries in a lookup table used by the floating-point division unit, which led to incorrect results when dividing certain pairs of numbers.

4 195 835 / 3 145 727 = 1.333820449136241002 (the correct value)

4 195 835 / 3 145 727 = 1.333739068902037589 (incorrect value due to the calculation error of the FDIV bug)

You may think that this is not a huge difference; most users would not even notice it. But under certain circumstances – like calculations with prime numbers, which is how the bug was discovered – these small discrepancies could add up and lead to entirely wrong results, potentially even with security consequences. The extent of the risk was much disputed at the time, and even today it is unclear whether it was “really an issue” at all from a security point of view.
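The size of the error can be checked with a few lines of arithmetic. The minimal Python sketch below assumes a correctly working floating-point unit (Python floats are IEEE 754 doubles); the flawed quotient is not reproduced but simply copied from the value quoted above. A popular quick test at the time multiplied the quotient back by the divisor and subtracted the product from the dividend: a correct quotient gives practically zero, while the flawed one leaves a residue of about 256. The helper name `residue` is made up for illustration.

```python
# Quotients from the FDIV example: the correct one is computed by the
# (assumed correctly working) FPU, the flawed one is copied from the text.
DIVIDEND = 4195835
DIVISOR = 3145727

correct_q = DIVIDEND / DIVISOR
flawed_q = 1.333739068902037589


def residue(q: float) -> float:
    """Return dividend - q * divisor, which is (nearly) 0 for a correct quotient."""
    return DIVIDEND - q * DIVISOR


relative_error = abs(correct_q - flawed_q) / correct_q

print(f"correct quotient:              {correct_q:.15f}")
print(f"residue with correct quotient: {residue(correct_q):.6f}")
print(f"residue with flawed quotient:  {residue(flawed_q):.6f}")
print(f"relative error:                {relative_error:.2e}")
```

The relative error is roughly 0.006 %, so the flawed quotient is only correct to about four significant digits.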

Not much later, in 1997, an anonymous post to an Internet newsgroup warned users of another problem, the so-called F00F bug, affecting all P5 series processors.

This time, the root cause of the bug was the CMPXCHG8B <OP> instruction, which normally compares the combined contents of the EDX and EAX registers (4+4=8 bytes) with 8 bytes at an arbitrary memory location specified in <OP> and, if they are equal, replaces those 8 bytes with the contents of ECX and EBX. If the operand was a register, the instruction was invalid and raised an exception (since it can’t compare 8 bytes to a 4-byte register). But if the instruction also carried the lock prefix – meant to make its memory access atomic – the processor froze instead. The invalid opcodes were in the range of 0F C7 C8-CF and the lock prefix was F0, thus the first two bytes of the offending instruction were F0 and 0F, hence the name F00F bug. Once the processor executed this specific instruction, only a hard reset helped, which – obviously – could cause disruption and possible data loss. Worse, the instruction needed no special privileges, so any program could freeze the whole machine.
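The opcode range C8-CF is not arbitrary. CMPXCHG8B is encoded as 0F C7 /1, and the byte after the opcode is a ModRM byte: its top two bits (mod) select a register operand when they are 11, and its middle three bits (reg) must be 001 to select CMPXCHG8B. The values C8 to CF are exactly the ModRM bytes with mod = 11 and reg = 001, i.e., CMPXCHG8B with one of the eight 32-bit registers as its operand. The simplified Python sketch below (the function names are hypothetical) checks a byte sequence for this pattern; it assumes the lock prefix comes first and ignores other prefixes, which a real x86 decoder would have to handle.

```python
# Simplified check for the F00F byte pattern. Assumption: the lock prefix is
# the first byte and no other prefixes are present.
LOCK_PREFIX = 0xF0
CMPXCHG8B_OPCODE = bytes([0x0F, 0xC7])  # CMPXCHG8B is encoded as 0F C7 /1


def decode_modrm(modrm: int) -> tuple[int, int, int]:
    """Split a ModRM byte into its mod (2 bits), reg (3 bits) and rm (3 bits) fields."""
    return (modrm >> 6) & 0b11, (modrm >> 3) & 0b111, modrm & 0b111


def is_f00f(code: bytes) -> bool:
    """True if code starts with LOCK CMPXCHG8B using a register operand."""
    if len(code) < 4 or code[0] != LOCK_PREFIX or code[1:3] != CMPXCHG8B_OPCODE:
        return False
    mod, reg, _rm = decode_modrm(code[3])
    # reg == 1 selects CMPXCHG8B within the 0F C7 group;
    # mod == 0b11 means a register operand, which is invalid for this instruction.
    return reg == 1 and mod == 0b11


samples = {
    "lock cmpxchg8b eax (F00F)": bytes([0xF0, 0x0F, 0xC7, 0xC8]),
    "cmpxchg8b eax (no lock, just an exception)": bytes([0x0F, 0xC7, 0xC8]),
    "lock cmpxchg8b [esi] (valid)": bytes([0xF0, 0x0F, 0xC7, 0x0E]),
}
for name, code in samples.items():
    print(f"{name}: {code.hex(' ')} -> {is_f00f(code)}")
```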

Since then, hardware complexity has exploded: the chips in today’s smart devices can contain thousands of times more transistors than a Pentium processor. Imagine the possibilities of a bug sneaking into the design at the hardware level. It’s true that design and testing tools and methods have evolved since then, catching many of the inadvertent mistakes that would have gone unnoticed before. However, it is also relatively easy to insert a malicious hardware backdoor into a processor, and such a backdoor is really hard to find.

Some more recent hardware security issues – Meltdown and Spectre

Two more recent vulnerabilities exploiting weaknesses in processor design are Meltdown and Spectre, both discovered in 2017 and made public in January 2018.

Meltdown exploits side-channel information available on many modern processors (its discoverers demonstrated it on Intel microarchitectures going back to 2010). It allows an attacker – who can run code on the vulnerable processor – to dump the entire kernel address space. It does this by abusing a performance feature called “out-of-order execution”.

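Out-of-order execution lets the processor look ahead of the currently executed instruction and execute later instructions as soon as their inputs are ready, to better utilize the CPU’s resources. This, however, can have unwanted side-effects on vulnerable processors. Even before Meltdown, side-effects such as timing differences were exploited to leak information. One attack in particular, Flush+Reload, leaks information through a cache side channel: it measures memory access times to detect whether a particular piece of data was cached or not. Meltdown combines the two: an unauthorized read of kernel memory is executed out of order before the permission check raises an exception, and the value read is used to access a memory location whose cache state the attacker can then probe with a cache side channel such as Flush+Reload.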
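A real Flush+Reload attack needs native code, a cache-flush instruction and a high-resolution timer, so the Python sketch below is only a simulation of its logic. The `ToyCache` class, the `victim` function and the cycle counts are all made up for illustration: the attacker flushes every line of a 256-entry probe array, the victim touches the line selected by a secret byte, and the attacker then reloads each line and picks the one that comes back fastest.

```python
import random

# Made-up latencies in "cycles"; real values depend on the CPU.
HIT_CYCLES = 40
MISS_CYCLES = 300


class ToyCache:
    """Toy cache model: it only remembers which probe-array lines are cached."""

    def __init__(self) -> None:
        self.lines: set[int] = set()

    def flush(self, line: int) -> None:
        self.lines.discard(line)

    def access(self, line: int) -> int:
        """Access a line and return a simulated latency (hit or miss, plus noise)."""
        latency = HIT_CYCLES if line in self.lines else MISS_CYCLES
        self.lines.add(line)  # after an access the line is cached
        return latency + random.randint(0, 20)


def victim(cache: ToyCache, secret_byte: int) -> None:
    # The victim's memory access depends on the secret.
    cache.access(secret_byte)


def flush_reload(cache: ToyCache, secret_byte: int) -> int:
    # 1. Flush: evict every line of the probe array.
    for line in range(256):
        cache.flush(line)
    # 2. Let the victim run.
    victim(cache, secret_byte)
    # 3. Reload: time every line; the fast one was touched by the victim.
    timings = [cache.access(line) for line in range(256)]
    return min(range(256), key=lambda line: timings[line])


print(hex(flush_reload(ToyCache(), secret_byte=0x42)))
```

In Meltdown, the role of the victim is played by the transiently executed instructions that read kernel memory and use the value as the probe-array index.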

On affected processors, the Meltdown hardware security vulnerability invalidated all security guarantees of memory isolation on personal computers and even on servers hosted in the cloud. One could harvest any secret in any process and in any operating system, even inside virtual machines. Hence the name “Meltdown”: the attacker could melt down hardware-enforced security boundaries.

Unlike Meltdown, Spectre didn’t break the isolation between user applications and the OS, but made it possible to leak information between applications. The name Spectre comes from “speculative execution”, a feature that lets the processor predict the outcome of a branch and execute the predicted path before it knows whether the prediction was correct. An attacker can deliberately mistrain this prediction so that the victim program speculatively accesses data it would never touch during normal execution. When the prediction turns out to be wrong, the results are discarded, but the cache state still reflects the data accessed along the mispredicted path, which may include sensitive information such as a cryptographic key. This information can then be extracted with a cache side channel, similarly to Meltdown.
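The mistraining step can be illustrated with a toy model of Spectre variant 1 (bounds check bypass). The sketch below is a simulation with hypothetical names (`ToyCPU`, `victim`): Python does not speculate, so the model uses a 1-bit branch predictor to decide whether the body of a bounds check runs even when the index is out of bounds. The architectural result of such a mispredicted run is discarded, but the simulated cache keeps a trace of the secret-dependent access.

```python
from typing import Optional


class ToyCPU:
    """Toy model: flat memory, a set of cached probe-array lines, a 1-bit predictor."""

    def __init__(self, memory: list[int]) -> None:
        self.memory = memory
        self.cached: set[int] = set()
        self.predict_in_bounds = False

    def victim(self, x: int, array1_len: int) -> Optional[int]:
        """Models: if x < array1_len: touch probe[array1[x]] and return array1[x]."""
        in_bounds = x < array1_len
        if in_bounds or self.predict_in_bounds:
            # Runs for real when in bounds, or only transiently on a misprediction.
            # Either way, the secret-dependent probe line ends up in the cache.
            self.cached.add(self.memory[x])
        # The predictor learns from the actual outcome of the bounds check.
        self.predict_in_bounds = in_bounds
        # Architectural result: a mispredicted run is rolled back and returns nothing.
        return self.memory[x] if in_bounds else None


ARRAY1 = [1, 2, 3, 4]  # public array the victim may index
SECRET = [0x53]        # secret byte stored right after it in memory
cpu = ToyCPU(ARRAY1 + SECRET)

for _ in range(5):     # 1. mistrain the predictor with valid indices
    cpu.victim(0, len(ARRAY1))
cpu.cached.clear()     # 2. flush the probe array
result = cpu.victim(len(ARRAY1), len(ARRAY1))  # 3. out-of-bounds index
leaked = sorted(cpu.cached)                    # 4. probe: which line is cached?
print(result, [hex(v) for v in leaked])
```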

But the bug is not necessarily in the CPU doing the computation. Even the memory can be vulnerable. The so-called Rowhammer vulnerability (or ‘row hammer’ – in two words – as Intel actually coined it in some patents while the linked paper was being written) exists because of a side-effect of high integration: DRAM cells are packed so densely that they can electrically interfere with each other. Its name comes from the way it works. DRAM is organized into rows and columns of cells. When neighboring rows are “hammered” (activated, i.e., accessed, again and again in rapid succession) with a specific pattern, bits in the targeted memory row can flip. This way an adversary can change memory contents they are not allowed to write, such as code or data that affects the security of the system.

See also this recent paper analyzing the real-world susceptibility of modern DRAM chips to Rowhammer attacks, which shows that it is still a relevant hardware security problem today.
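The disturbance mechanism can be sketched as a toy model. Everything in the Python code below is a simplifying assumption made for illustration: `FLIP_THRESHOLD` is a made-up number, each activation disturbs only the two adjacent rows, and exactly one hypothetical bit flips once the threshold is reached. Real DRAM refreshes every row periodically (typically every 64 ms), so an attacker has to hammer fast enough to cross the threshold between two refreshes; the sketch uses “double-sided” hammering, which alternately activates the rows directly above and below the victim row.

```python
# Toy model of Rowhammer disturbance errors. The threshold and the bit that
# flips are hypothetical; real DRAM behaviour varies from chip to chip.
FLIP_THRESHOLD = 50_000  # neighbour activations needed before a flip


class ToyDRAM:
    def __init__(self, rows: int, row_bits: int = 64) -> None:
        self.data = [0] * rows     # each row stored as an integer bit field
        self.disturb = [0] * rows  # neighbour activations since the last refresh
        self.row_bits = row_bits

    def activate(self, row: int) -> None:
        """Open a row (e.g. for a read); this disturbs the two adjacent rows."""
        for victim in (row - 1, row + 1):
            if 0 <= victim < len(self.data):
                self.disturb[victim] += 1
                if self.disturb[victim] == FLIP_THRESHOLD:
                    self.data[victim] ^= 1 << (victim % self.row_bits)  # flip one bit

    def refresh(self) -> None:
        """Periodic refresh restores the charge, so disturbance starts from zero."""
        self.disturb = [0] * len(self.data)


def double_sided_hammer(dram: ToyDRAM, victim: int, rounds: int) -> None:
    # Alternately activate the rows directly above and below the victim row.
    for _ in range(rounds):
        dram.activate(victim - 1)
        dram.activate(victim + 1)


dram = ToyDRAM(rows=8)
double_sided_hammer(dram, victim=4, rounds=30_000)
print(f"row 4 after hammering: {dram.data[4]:#x}")

refreshed = ToyDRAM(rows=8)
double_sided_hammer(refreshed, victim=4, rounds=20_000)
refreshed.refresh()  # a refresh in between resets the disturbance
double_sided_hammer(refreshed, victim=4, rounds=20_000)
print(f"row 4 with a refresh in between: {refreshed.data[4]:#x}")
```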

Obviously, the more complex a system is, the more room there is for an unforeseen vulnerability. And, as always, a single exploitable problem is often enough for an attacker to orchestrate a complex attack.

The access of doom

It’s simple. If an attacker has access to a system, they can most likely find ways to elevate their privileges to do more harm. This is also true when it comes to hardware security.

For example, all controls of an airplane are in the cockpit – except when there is a “Seat Electronic Box” installed under the passenger seats, giving physical access to the onboard network. These connections make maintenance easier for technicians, but they can also be abused by an attacker to take over the internal network of the airplane – including the computer controlling the flight. A security researcher claimed to have done just that in 2015.

Missing or broken authentication is a common hardware security issue in vehicles. On a physical level, some cars allow access to the CAN bus from the outside due to a design flaw. For example, the pedestrian warning speakers on some electric cars – located near the bumpers – could be hooked up to a tiny purpose-built device that intercepts and generates CAN bus traffic. An attacker could plant such a device in seconds while the vehicle is parked, and then get direct access to read and manipulate the data exchanged between the different controllers (ECUs) of the vehicle. This way, one can unlock the car, set it in motion, accelerate, brake, or steer it. All through data sent over a few wires accessible to anybody. This is a kind of “Evil Maid” attack, named after the situation when your electronics are accessible to cleaning personnel, who can swap them unnoticed for a maliciously modified device that looks identical to the naked eye.

And let’s not forget about the debug and test ports (such as JTAG or UART) that can be found on most modern boards. These ports make testing the hardware easier but can also give an attacker a way into the system.
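To see why a rogue device on the bus is so dangerous, it helps to look at what a CAN frame actually contains. The Python sketch below packs a classic CAN frame in the layout that Linux SocketCAN uses for `struct can_frame` (the same `"=IB3x8s"` format string appears in the Python `socket` module documentation). The identifier `DOOR_CMD_ID`, the `UNLOCK` payload and the `toy_door_ecu` function are hypothetical and not taken from any real vehicle. The point is that a frame carries only an identifier, a length and data: there is no field saying who sent it and no authentication, so a receiving ECU cannot tell a planted device from a genuine controller.

```python
import struct

# Classic CAN frame as laid out by Linux SocketCAN (struct can_frame):
# 32-bit identifier, 8-bit data length, 3 padding bytes, 8 data bytes.
CAN_FRAME_FMT = "=IB3x8s"

# Hypothetical identifier and payload for a "door unlock" command.
# Real identifiers and payloads are manufacturer-specific.
DOOR_CMD_ID = 0x123
UNLOCK = bytes([0x01])


def build_can_frame(can_id: int, data: bytes) -> bytes:
    if len(data) > 8:
        raise ValueError("a classic CAN frame carries at most 8 data bytes")
    return struct.pack(CAN_FRAME_FMT, can_id, len(data), data.ljust(8, b"\x00"))


def parse_can_frame(frame: bytes) -> tuple[int, bytes]:
    can_id, length, data = struct.unpack(CAN_FRAME_FMT, frame)
    return can_id, data[:length]


def toy_door_ecu(frame: bytes) -> str:
    # The frame holds only an identifier and data. Nothing identifies the
    # sender, so the ECU cannot distinguish a planted device from a real one.
    can_id, data = parse_can_frame(frame)
    if can_id == DOOR_CMD_ID and data == UNLOCK:
        return "doors unlocked"
    return "ignored"


genuine = build_can_frame(DOOR_CMD_ID, UNLOCK)  # from the body controller
rogue = build_can_frame(DOOR_CMD_ID, UNLOCK)    # from a device behind the bumper
print(genuine == rogue, toy_door_ecu(rogue))
```

Sending such a frame on a real bus would additionally require a CAN interface, for example a `socket.AF_CAN` raw socket on Linux; the sketch deliberately stops at building and parsing frames.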

When looking at hardware security, side-channel attacks are often ignored. An attacker can map out a system identical to the target by analyzing its power consumption, the timing of its operations, or even the electromagnetic radiation it emits, and then use the information learned (e.g., the algorithms or secret keys used) to carry out the attack. When the attacker has access to the hardware, they can also go beyond passive observation and actively induce faults, for example to change the content of protected memory space; Rowhammer, described previously, is such a fault attack, although it does not even require physical access.
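An early-exit comparison is a classic example of a timing side channel. In the Python sketch below, the number of byte comparisons stands in for execution time (a hypothetical, noise-free measurement; real attacks need many timed repetitions). Because `leaky_equals` stops at the first mismatching byte, a guess with a longer correct prefix takes longer to reject, so an attacker can recover a secret one byte at a time instead of trying every possible value. All function names are made up for illustration.

```python
import hmac
from typing import Callable

Oracle = Callable[[bytes], tuple[bool, int]]


def leaky_equals(secret: bytes, guess: bytes) -> tuple[bool, int]:
    """Early-exit comparison that also reports how many bytes it compared."""
    if len(secret) != len(guess):
        return False, 0
    steps = 0
    for s, g in zip(secret, guess):
        steps += 1
        if s != g:
            return False, steps  # stopping early is the timing leak
    return True, steps


def make_oracle(secret: bytes) -> Oracle:
    """Hypothetical target (e.g. a PIN check) that leaks its comparison count."""
    return lambda guess: leaky_equals(secret, guess)


def recover_secret(oracle: Oracle, length: int) -> bytes:
    """Recover the secret byte by byte: 256 * length guesses instead of 256 ** length."""
    known = b""
    for position in range(length):
        padding = b"\x00" * (length - position - 1)
        # Prefer a full match (decisive only for the last byte), then more steps.
        best = max(range(256), key=lambda c: oracle(known + bytes([c]) + padding))
        known += bytes([best])
    return known


def safe_equals(secret: bytes, guess: bytes) -> bool:
    # Constant-time comparison from the standard library.
    return hmac.compare_digest(secret, guess)


print(recover_secret(make_oracle(b"k3y!"), 4))
```

The usual fix is a constant-time comparison such as `hmac.compare_digest`, whose running time does not depend on where the first mismatch is.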

Apart from side-channel attacks, it is worth mentioning that DRAM doesn’t lose its content the moment power is switched off: the data fades gradually, typically over seconds, and even more slowly if the chips are cooled. Therefore, there is also the possibility to recover some secrets with a “cold boot attack”. In this scenario the attacker resets or power-cycles the device and quickly boots a small tool (or moves the memory modules to another machine) that dumps the remaining memory contents to persistent storage, where the adversary can analyze them. Once again: this dumped memory can contain sensitive information, like secret keys, tokens or similar.
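Once an attacker has a memory dump, they still have to find the secrets in it. One simple heuristic, sketched below in Python, is to look for blocks with unusually high entropy, since cryptographic keys look random while most memory contents do not. The window size, the threshold and the “dump” are illustrative assumptions, and this is only a rough filter: tools published with the original cold boot research, such as aeskeyfind, instead search for the structure of AES key schedules, which is more reliable.

```python
import math
from collections import Counter


def shannon_entropy(block: bytes) -> float:
    """Shannon entropy in bits per byte; a 32-byte block can reach at most 5 bits."""
    if not block:
        return 0.0
    total = len(block)
    return -sum((n / total) * math.log2(n / total) for n in Counter(block).values())


def find_key_candidates(dump: bytes, window: int = 32, threshold: float = 4.5) -> list[int]:
    """Offsets of non-overlapping windows whose entropy reaches the threshold."""
    return [
        offset
        for offset in range(0, len(dump) - window + 1, window)
        if shannon_entropy(dump[offset:offset + window]) >= threshold
    ]


# Hypothetical dump: zeroed memory, 32 distinct bytes standing in for a key, text.
key = bytes(range(7, 256, 8))
dump = bytes(64) + key + b"A" * 64
print(find_key_candidates(dump))
```

Scanning non-overlapping windows keeps the sketch short but can miss a key that straddles a window boundary; a smaller step catches those at the cost of more work.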

Is there anything I can do?

As always, the best defense against attacks is proactivity. Design and build your devices with security in mind. For example, UEFI (Unified Extensible Firmware Interface) Secure Boot can protect against some “Evil Maid” attacks, and some hardware vendors like ARM are designing their CPUs to be resistant to Spectre and Meltdown. You can also disable JTAG, use full memory encryption, use hardware security modules, use an OS that mitigates Meltdown via kernel page table isolation (and Spectre via techniques such as retpolines), and so on, and so forth… you get the picture.
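On Linux, you can check which of these mitigations are active on a given machine: since kernel version 4.15, the kernel reports the status of known CPU vulnerabilities as small text files under `/sys/devices/system/cpu/vulnerabilities/`, for example `meltdown` reading `Mitigation: PTI` when kernel page table isolation is enabled. The Python sketch below simply lists those files; it assumes a Linux system and returns an empty result elsewhere. The function name is hypothetical.

```python
from pathlib import Path

# Directory in which Linux reports the status of known CPU vulnerabilities.
VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def read_vulnerability_status(vuln_dir: Path = VULN_DIR) -> dict[str, str]:
    """Map each reported vulnerability (file name) to its status line.

    Returns an empty dict when the directory does not exist, e.g. on other
    operating systems or older kernels.
    """
    if not vuln_dir.is_dir():
        return {}
    return {
        entry.name: entry.read_text().strip()
        for entry in sorted(vuln_dir.iterdir())
        if entry.is_file()
    }


for name, status in read_vulnerability_status().items():
    print(f"{name:25} {status}")
```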

In a follow-up article, we will go into more detail about what makes software vulnerabilities, their exploitation, and protection measures different in the embedded world. In the meantime, you can also learn more about secure software design and implementation practices in one of our courses, and about secure software development for the embedded world in C and C++ – check out our course catalog for more details.